Global Economy Posts on Crowch

In June 2025, Canada will host the annual G7 Summit, one of the most influential gatherings of global leaders. The heads of the seven largest advanced economies will come together to address pressing global issues, from climate and security to artificial intelligence and economic recovery.

What Is the G7

The Group of Seven comprises Canada, the United States, the United Kingdom, Germany, France, Italy, and Japan. Together, these countries represent roughly 40% of global GDP and lead in innovation, finance, trade, and diplomacy.

The G7 is known for its informal and direct approach. Unlike formal organizations, it has no permanent headquarters or binding agreements. Instead, it enables open dialogue and rapid response to global developments.

Key Topics in 2025

- Global economy & inflation: coordinated efforts to curb inflation, support supply chains, reduce inequality

- Security & geopolitics: war in Ukraine, policy alignment on China, cyber defense strategies

- Climate change: decarbonization targets, green investment plans, energy transition

- Artificial intelligence & tech: regulating AI, safeguarding data, strengthening digital infrastructure

- Global health: pandemic preparedness, post-COVID reforms, aid to low-income nations

Special attention will also be paid to partnerships with emerging economies in Africa and Asia, and to reinforcing global institutions such as the WHO, WTO, and IMF.

Why It Matters

The G7 is where global priorities are shaped. Initiatives launched here often guide larger platforms like the G20 or the UN. As host, Canada aims to highlight democratic unity, responsible leadership, and cooperation in times of global tension.

The 2025 summit will take place against a backdrop of uncertainty — geopolitical instability, climate crises, and rapid tech disruption. The decisions made in Canada will influence international policies and potentially reshape the global order.

In recent years, the word "ecology" has stopped being something abstract. Wildfires, droughts, abnormal rains, heatwaves in cities, disappearing forests, and shrinking rivers — we see all this not on screens, but outside our windows. Climate change is no longer a hypothesis; it’s a reality that millions of people face every day. What once seemed distant now hits close to home, affecting every aspect of modern life.

Environmental instability affects not just nature, but also the economy, politics, infrastructure, agriculture, and even mental health. Crops are failing due to heat and unpredictable seasons, which leads to food shortages and rising prices. Cities become urban heat islands, where concrete traps warmth and creates dangerous living conditions. Infrastructure that was not built to withstand these extremes begins to collapse — roads buckle, power grids overload, and buildings suffer from unexpected flooding. Access to clean, reliable water is becoming increasingly uncertain in regions that once took it for granted. In some places, communities are forced to relocate because their homes are no longer livable.

Beyond the physical consequences, there are serious psychological and emotional effects. The fear of what comes next — of an unstable future, of natural disasters, of global collapse — is growing. This is especially noticeable among the younger generation, who will inherit the consequences of today’s inaction. Psychologists now recognize "climate anxiety" as a legitimate condition: a persistent feeling of helplessness, dread, and sadness connected to ecological change. It’s not irrational — it’s a rational response to what people see and feel every day.

Meanwhile, governments and corporations often issue bold climate-related statements. Commitments to reduce emissions, transition to green energy, or preserve forests are announced at international summits and celebrated in the media. But on the ground, progress is slow. Promises are postponed, diluted, or undermined by political pressure, economic interests, or lack of coordination. Many people feel disillusioned and frustrated, realizing that declarations are not the same as meaningful action.

There is a growing understanding that time is running out. The opportunity to avoid the worst impacts of climate change is still there, but it is shrinking rapidly. The coming decade is critical — not only for reducing emissions, but for fundamentally changing how societies function. The world is entering an era of climate responsibility, and each of us plays a role in what that looks like.

The solution is not only about innovation and technology — though clean energy, carbon capture, and sustainable agriculture are all vital. It’s also about our daily choices. What we eat, how we travel, what we buy, how much we waste, and who we support politically — these things matter. Small changes on a personal level, multiplied across millions of lives, can drive systemic change. But it requires honesty, courage, and commitment.

Mass awareness, collective pressure on businesses and political institutions, and a shift in mindset toward long-term, sustainable thinking are not signs of idealism. They are a necessary response to reality. This is not just a movement for environmentalists — it’s a survival strategy for all of humanity. The future of the planet depends on the choices we make today — and whether we are willing to act before it’s too late.

Back in January, when the Global Economic Forum in Singapore released its declaration on autonomous financial oversight, most of us finance nerds leaned in. The idea? That in an era of AI-powered trading, risk modeling, and decentralized finance, the biggest threat isn’t irrational investors — it’s outdated human regulation.

Fast forward to June 2025, and we’ve entered a new era: AI-managed financial governance. From monetary policy tweaks to fraud detection and even ESG enforcement, intelligent systems are now officially embedded in economic steering. And while it feels exciting — even efficient — the philosophical questions it raises are impossible to ignore.

The Birth of “Smart Regulation” — and the Quiet Death of Lag

Let’s rewind. In 2024, three major financial crises happened because regulatory action couldn’t keep up with algorithmic activity: the flash crash in Southeast Asian markets caused by mispriced carbon futures, the synthetic commodity bubble in sub-Saharan Africa, and the decentralized insurance liquidity collapse in LATAM.

In each case, AI-driven systems outpaced human oversight.

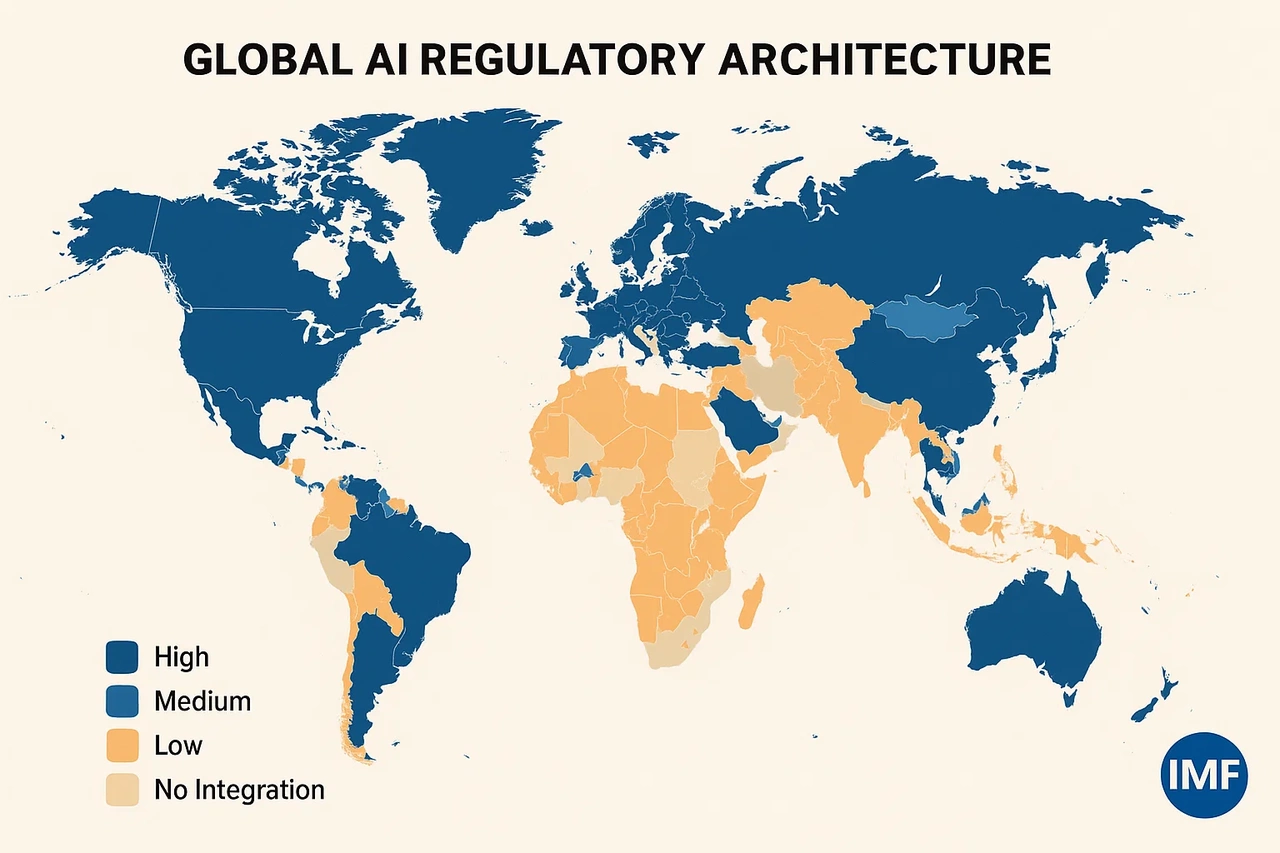

In response, countries like Germany, Singapore, and Brazil started running parallel regulatory pilots powered by machine learning. By 2025, the IMF endorsed the use of Autonomous Supervisory Systems (ASS) — yes, someone really should’ve thought that acronym through — to analyze risk exposure and suggest automatic intervention protocols for central banks.

The promise? Fewer crashes. Faster corrections. Data-driven ethics. The risk? Decisions without debate. Corrections without accountability.

Finance by the Numbers — or by the Values?

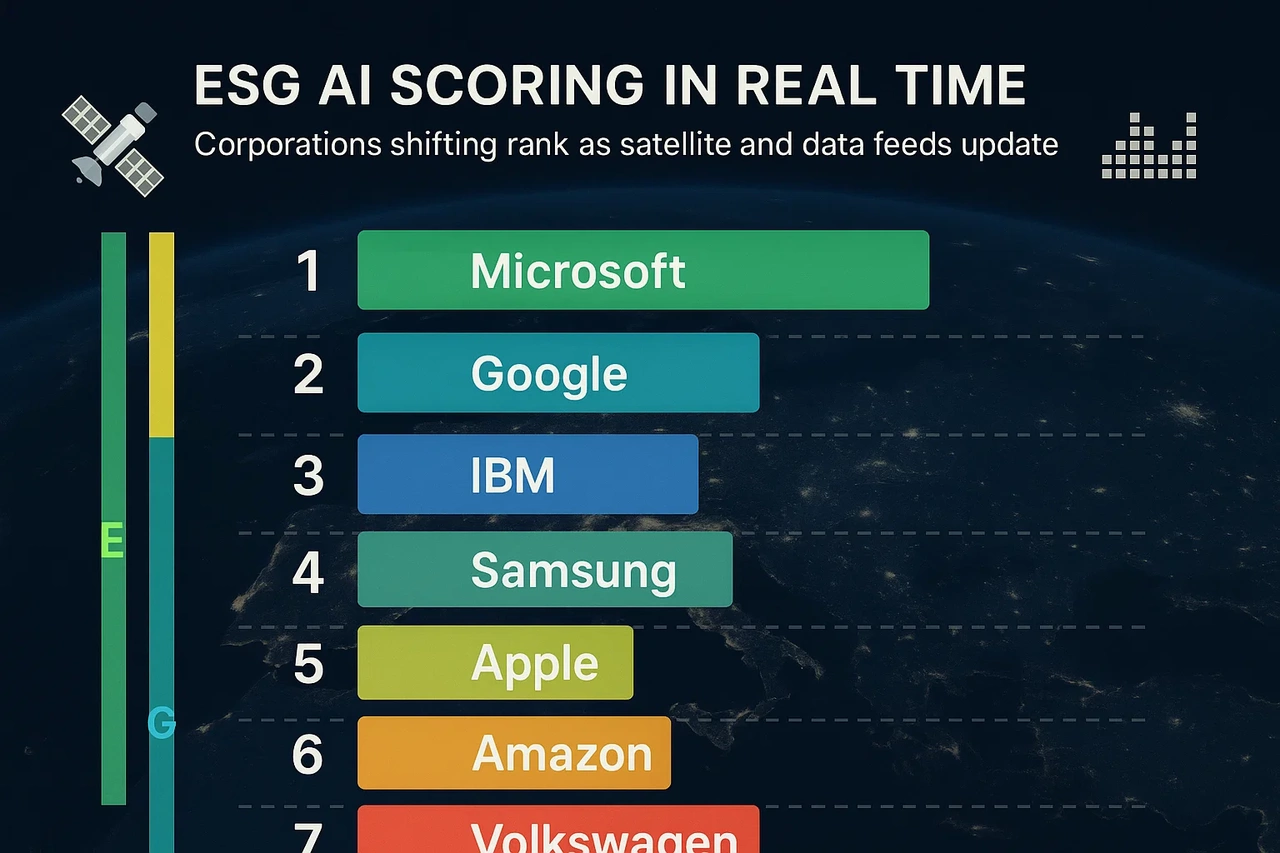

Here’s where I get both excited and uneasy. One of the most radical shifts this year was the AI-enforced ESG scoring mandate adopted in the EU and Canada. Using satellite imagery, corporate disclosures, and real-time social sentiment scraping, companies are now given dynamic ESG scores that directly affect access to loans and tax breaks.

In theory, it’s brilliant. No more greenwashing. No more lobbyist-influenced blind spots.

Still, I couldn’t help but think: whose ethics are coded into this system? What happens when an algorithm penalizes a small manufacturer in Eastern Europe for labor practices based on standards it learned from Fortune 500 datasets?

We’ve created a system that’s efficient, but not always empathetic. And while it’s tempting to say "trust the data," we need to ask who’s labeling the training sets, who’s weighting the priorities, and who ultimately benefits. Because in 2025, finance is no longer just about money — it’s about algorithms enforcing morality.
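To make the "who's weighting the priorities" question concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `EsgSignals` fields, the weight sets, and the numbers are made up for illustration, and a real scoring mandate would presumably be far more involved. The point is only that the same company data can produce different scores depending on the weights someone chose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EsgSignals:
    """Hypothetical inputs, each already normalized to the range 0..1."""
    environmental: float
    social: float
    governance: float


def esg_score(signals: EsgSignals, weights: dict[str, float]) -> float:
    """Weighted average of the three signals, scaled to 0..100."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = (
        signals.environmental * weights["environmental"]
        + signals.social * weights["social"]
        + signals.governance * weights["governance"]
    )
    return 100 * weighted / total


# Illustrative company: strong on environment, weaker on labor metrics.
small_manufacturer = EsgSignals(environmental=0.8, social=0.4, governance=0.6)

# Two assumed weightings; neither comes from any real regulation.
balanced = {"environmental": 1.0, "social": 1.0, "governance": 1.0}
labor_heavy = {"environmental": 1.0, "social": 3.0, "governance": 1.0}

print(round(esg_score(small_manufacturer, balanced), 1))     # 60.0
print(round(esg_score(small_manufacturer, labor_heavy), 1))  # 52.0
```

Nothing about the company changed between the two lines of output. Only the weights did, and if a loan threshold sat somewhere between 52 and 60, that choice alone would decide the outcome.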

The Digital Bretton Woods?

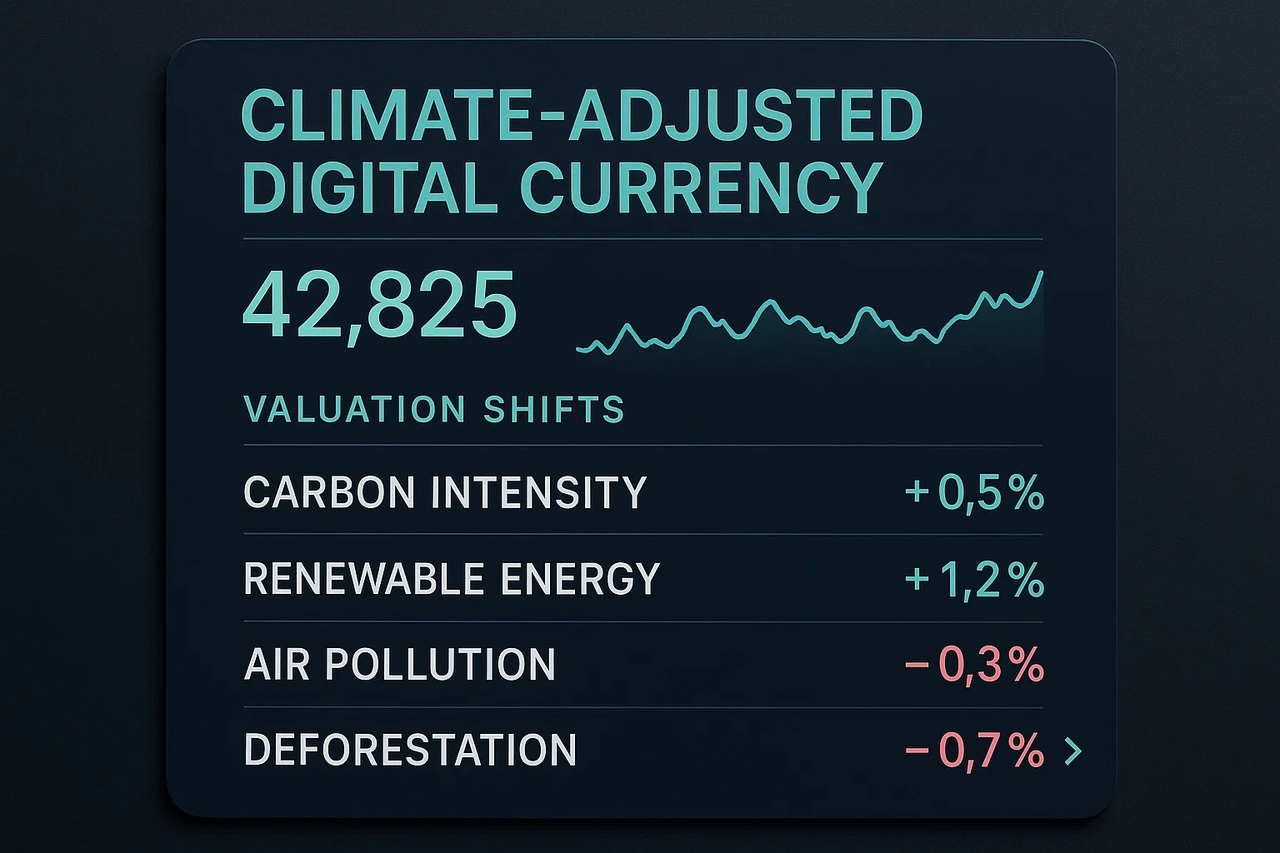

In April, I attended a virtual roundtable titled “Decoding the New Standard”, where finance ministers, AI ethicists, and token economists discussed whether we’re moving toward a digital Bretton Woods — a new global economic order shaped not by gold or GDP, but by code, consensus, and compute power. And here’s what’s wild: for the first time, digital currency frameworks are being tied to climate performance.

Imagine this: a country’s central bank digital currency (like the e-euro or DUSDR) can devalue if national emissions exceed their targets, or strengthen if carbon drawdown thresholds are met.

It’s bold. It’s technocratic. And it might be the only way to force accountability into financial systems that have ignored planetary cost for decades.

But it also introduces new instability. What happens when a wildfire — a random natural disaster — tanks your national currency? Are we creating a fairer market, or just a more volatile one?
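Being a spreadsheet person, I tried to picture what such a rule might look like. The sketch below is purely my own assumption, not anything discussed at the roundtable: the function name `climate_adjustment`, the `sensitivity` factor, and the `cap` are all hypothetical. It collapses the idea into a single emissions-versus-target ratio and clamps the result, which is one possible way to soften a one-off shock like a wildfire.

```python
def climate_adjustment(
    emissions: float,
    target: float,
    sensitivity: float = 0.5,  # assumed: how strongly the currency reacts
    cap: float = 0.05,         # assumed: maximum move per period, up or down
) -> float:
    """Return the fractional change applied to the currency's reference value.

    Negative when emissions exceed the target, positive when they come in
    below it, and always clamped to the range [-cap, +cap].
    """
    if target <= 0:
        raise ValueError("target must be positive")
    overshoot = (emissions - target) / target
    change = -sensitivity * overshoot
    return max(-cap, min(cap, change))


# Illustrative figures in arbitrary units; none of these are real data.
on_track = climate_adjustment(emissions=96.0, target=100.0)
slight_miss = climate_adjustment(emissions=104.0, target=100.0)
wildfire_year = climate_adjustment(emissions=130.0, target=100.0)

print(f"on track:      {on_track:+.3f}")
print(f"slight miss:   {slight_miss:+.3f}")
print(f"wildfire year: {wildfire_year:+.3f}")
```

With these made-up numbers, a 4% miss moves the currency by 2%, while the wildfire year hits the 5% cap instead of falling 15%. Whether a cap like that makes the market fairer or just hides the volatility is exactly the open question.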

Where Does This Leave Us?

As a young guy who loves spreadsheets, market cycles, and economic theory, I’m genuinely fascinated by what’s unfolding in 2025. We’ve spent decades saying the market is rational, self-correcting, and largely ungovernable. Now we’re watching the rise of tools that actually make it governable, but in ways that often remove the human from the loop.

We’re replacing regulators with neural nets, central banks with logic chains, and policy with predictions. It’s efficient. It’s elegant. But it’s also deeply unsettling. Because economies aren’t just data. They’re people, cultures, fears, aspirations, and irrational choices. So the real question for the second half of 2025 isn’t how smart the system gets — it’s how human we allow it to remain.

The digital revolution has radically transformed the world in just a few decades. What once seemed like science fiction — instant video calls, voice-controlled devices, contactless payments, and remote work — is now part of everyday life. The internet, smartphones, and artificial intelligence have not only made tasks more convenient but have also created an entirely new reality in which we live, work, learn, and connect.

One of the most visible changes brought by technology is in communication. Where letters once took weeks and phones were attached to walls, now we can instantly send a message, start a video call, or join an international online meeting with a tap. Social media allows us to connect globally, share real-time updates, ideas, and emotions. Yet, these benefits come with challenges: digital addiction, shorter attention spans, and blurred personal boundaries are growing concerns.

Another major area of transformation is education. Online courses, remote learning platforms, video lectures, and mobile apps have made knowledge more accessible than ever. A student in a rural village can now study programming at Harvard or take a finance course from London. But this also demands new skills: the ability to evaluate information critically, stay self-motivated, and adapt to digital learning environments.

The world of work has changed no less. Remote work, freelancing, flexible hours, and automation are now common. Businesses are moving online, and new careers are emerging — from cybersecurity experts to digital marketers and AI developers. These shifts bring opportunity, but also require constant learning and the ability to adapt to rapid change.

Technology’s impact on health is another important topic. We now have apps that monitor sleep, physical activity, stress levels, and even offer telemedicine. However, issues such as sedentary lifestyles, screen fatigue, and digital dependency are on the rise. It is becoming increasingly important to use technology mindfully — setting boundaries, taking screen breaks, moving more, and prioritizing in-person connections.

In conclusion, technology has become inseparable from modern life. It brings incredible potential, but also new responsibilities. The key challenge of the 21st century is to maintain balance — using digital tools to enhance, not replace, real life. Developing this balance may be one of the most essential life skills for our generation and the ones to come.

The digital revolution has radically transformed nearly every aspect of life in just a few decades. What once belonged in the realm of science fiction — instant video calls, voice-controlled assistants, contactless payments, and global remote work — has become the fabric of our daily experience. We now live in a world where the internet, smartphones, cloud computing, and artificial intelligence have not only made tasks more convenient but have redefined how we live, work, learn, socialize, and even think.

One of the most visible and personal transformations is in communication. There was a time when sending a letter meant waiting days or weeks for a reply, and phones were landline devices shared by households. Today, with a single tap, we can message someone across the world, host real-time video calls, or share life updates with hundreds of people simultaneously via social media. These platforms have allowed us to maintain relationships, build communities, and even amplify voices in powerful social movements.

But with such connection also come new challenges:

- Digital addiction and the compulsion to constantly check notifications

- Shortened attention spans and difficulty focusing

- Blurred boundaries between personal and professional life

- Mental health concerns, such as comparison, FOMO (fear of missing out), and online harassment

In the realm of education, technology has unlocked unprecedented access. Online courses, video tutorials, virtual classrooms, and educational apps have made learning borderless. A student in a remote village can attend a coding bootcamp in Silicon Valley, while a working adult can study psychology at Oxford from their living room. Yet this digital expansion also demands new digital literacy skills:

- The ability to evaluate sources critically

- The discipline for self-paced learning

- Comfort with digital collaboration tools

- Awareness of cybersecurity and data privacy

The world of work has evolved no less. The rise of remote work, the gig economy, and automation has reshaped traditional job structures. Many roles are now location-independent, and new professions, from AI ethics consultants to blockchain developers, are emerging. Companies are increasingly relying on digital infrastructure, while individuals must constantly update their skill sets to remain relevant. The idea of a “job for life” is being replaced by a model of continuous growth and adaptation.

Technology’s role in health and wellness is equally transformative. Fitness trackers, sleep monitors, meditation apps, and telehealth platforms allow people to track, manage, and consult on their health like never before. We can detect irregular heart rates, count steps, manage nutrition, and access therapy — all from our phones. However, digital health also presents new risks:

- Sedentary lifestyles due to screen time

- Disrupted sleep cycles from blue light exposure

- “Zoom fatigue” and chronic screen exhaustion

- Reduced in-person social interaction

This highlights the need for digital mindfulness — the practice of using technology with intention, setting limits, unplugging regularly, and reconnecting with nature, people, and self.

In conclusion, technology is no longer a separate tool — it’s woven into the fabric of modern life. It offers immense potential to improve our lives, expand knowledge, and connect humanity. But it also asks for responsibility, discipline, and discernment. The key challenge of the 21st century is not whether we adopt new technologies — but how we integrate them without losing ourselves.

To thrive in this new era, we must develop a balanced relationship with technology — one that enhances rather than replaces real life. That balance may become one of the most essential life skills for our generation — and a legacy of wisdom we pass on to those who follow.