When the Market Thinks for Itself: AI Regulation and the Future of Finance in 2025

Back in January, when the Global Economic Forum in Singapore released its declaration on autonomous financial oversight, most of us finance nerds leaned in. The idea? That in an era of AI-powered trading, risk modeling, and decentralized finance, the biggest threat isn’t irrational investors; it’s outdated human regulation.

Fast forward to June 2025, and we’ve entered a new era: AI-managed financial governance. From monetary policy tweaks to fraud detection and even ESG enforcement, intelligent systems are now formally embedded in how economies are steered. And while that feels exciting, even efficient, the philosophical questions it raises are impossible to ignore.

The Birth of “Smart Regulation” — and the Quiet Death of Lag

Let’s rewind. In 2024, three major financial crises erupted because regulatory action couldn’t keep pace with algorithmic activity: the flash crash in Southeast Asian markets caused by mispriced carbon futures, the synthetic commodity bubble in sub-Saharan Africa, and the liquidity collapse in decentralized insurance in Latin America.

In each case, AI-driven systems outpaced human oversight.

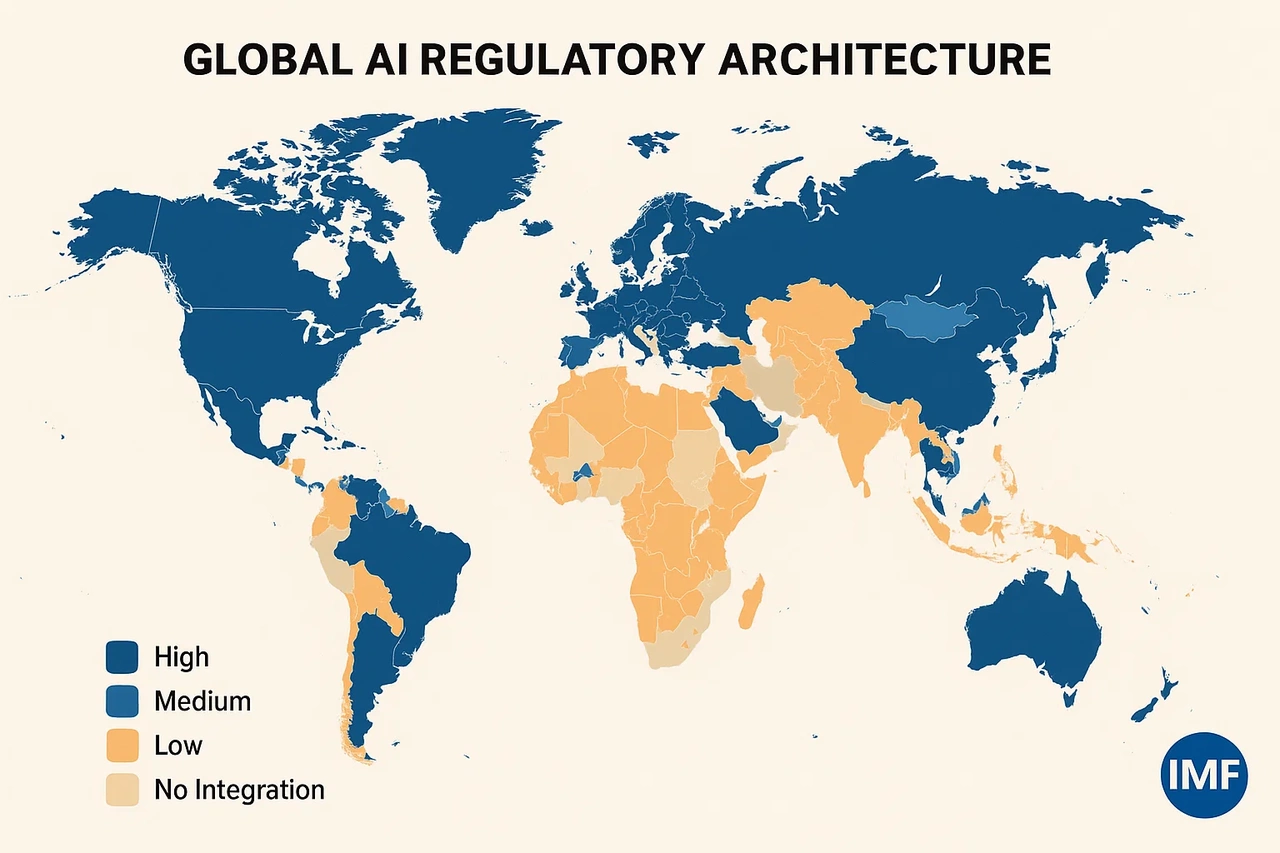

In response, countries like Germany, Singapore, and Brazil started running parallel regulatory pilots powered by machine learning. By 2025, the IMF had endorsed the use of Autonomous Supervisory Systems, or ASS (yes, someone really should’ve thought that acronym through), to analyze risk exposure and recommend automatic intervention protocols to central banks.

The promise? Fewer crashes. Faster corrections. Data-driven ethics. The risk? Decisions without debate. Corrections without accountability.

Finance by the Numbers — or by the Values?

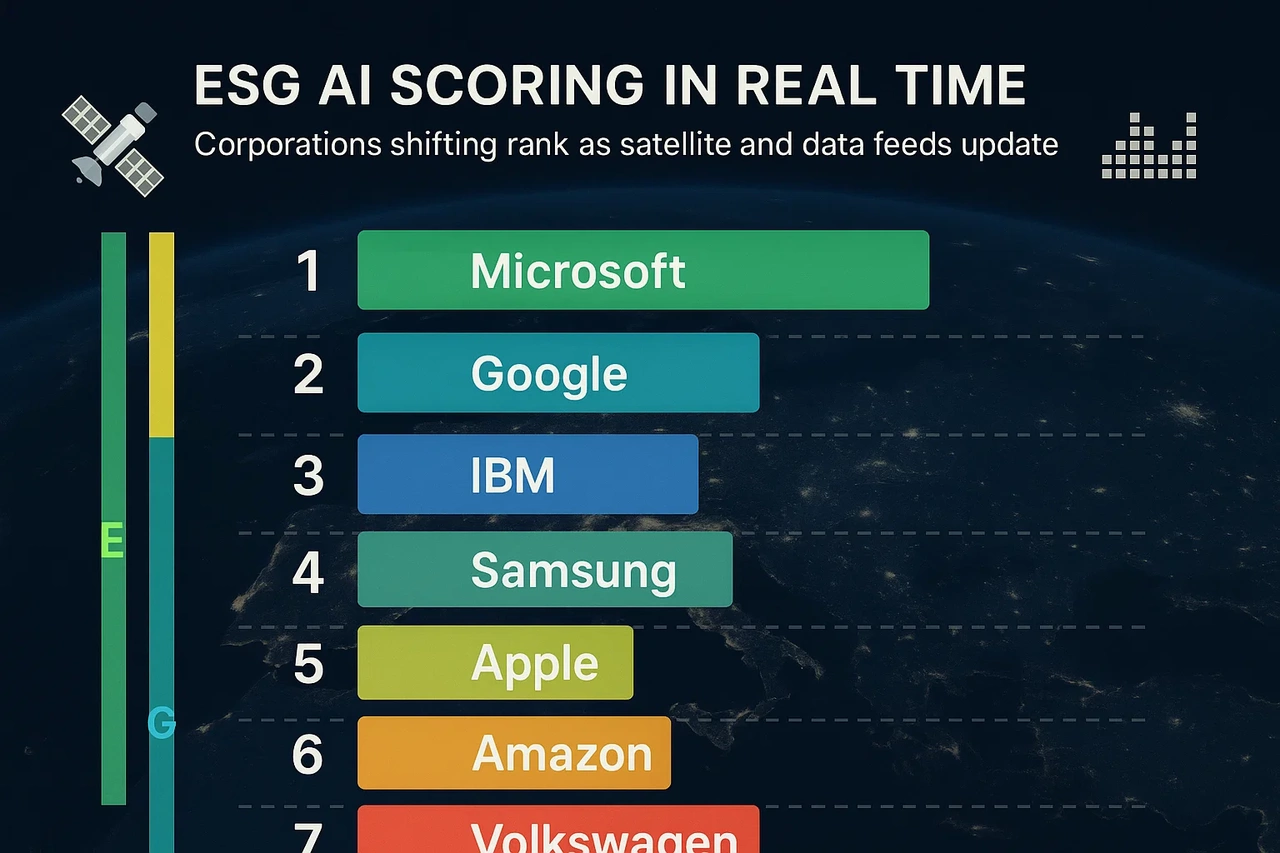

Here’s where I get both excited and uneasy. One of the most radical shifts this year was the AI-enforced ESG scoring mandate adopted in the EU and Canada. Drawing on satellite imagery, corporate disclosures, and real-time scraping of social sentiment, the system now assigns companies dynamic ESG scores that directly affect their access to loans and tax breaks.

In theory, it’s brilliant. No more greenwashing. No more lobbyist-influenced blind spots.

But I couldn’t help wondering: whose ethics are coded into this system? What happens when an algorithm penalizes a small manufacturer in Eastern Europe for its labor practices, judged against standards it learned from Fortune 500 datasets?

We’ve created a system that’s efficient, but not always empathetic. And while it’s tempting to say "trust the data," we need to ask who’s labeling the training sets, who’s weighting the priorities, and who ultimately benefits. Because in 2025, finance is no longer just about money; it’s also about algorithms enforcing morality.

The Digital Bretton Woods?

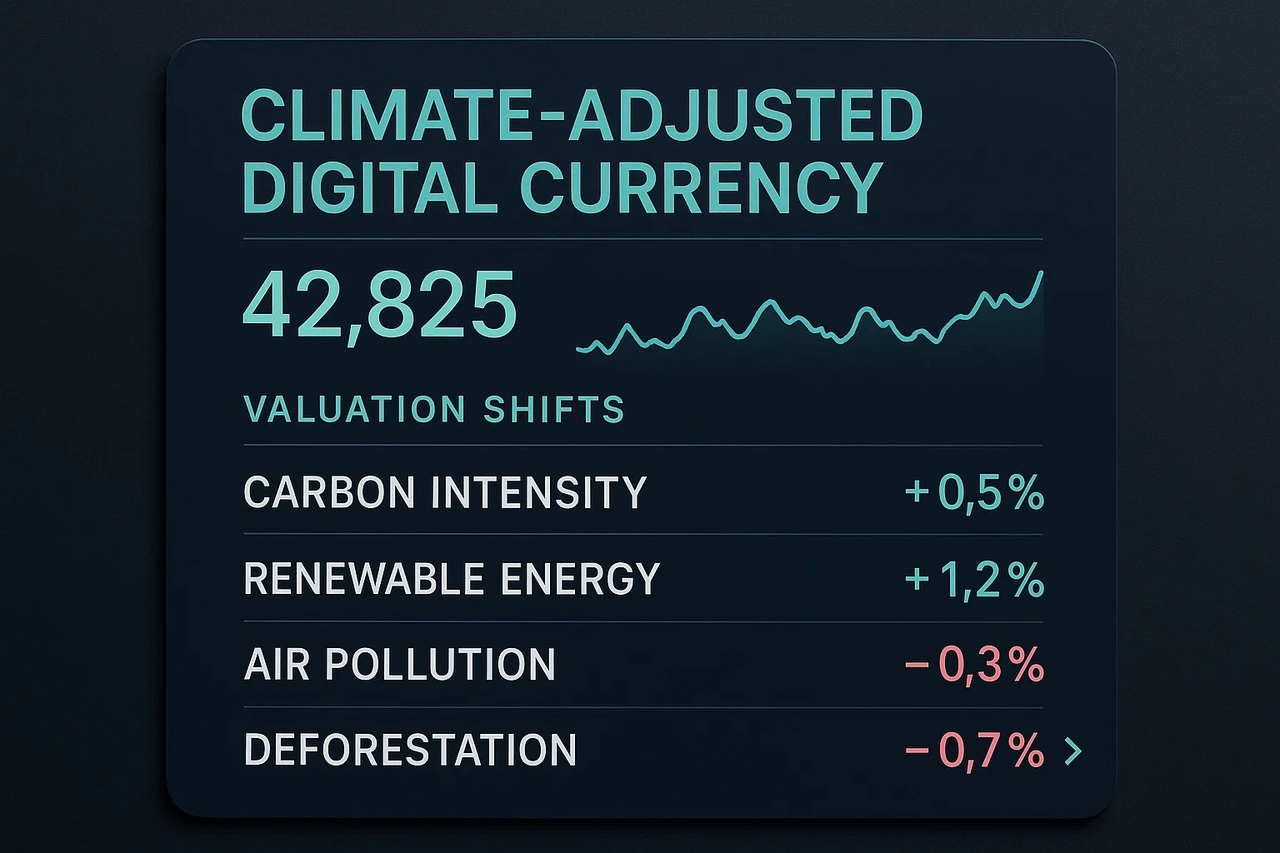

In April, I attended a virtual roundtable titled “Decoding the New Standard,” where finance ministers, AI ethicists, and token economists discussed whether we’re moving toward a digital Bretton Woods: a new global economic order shaped not by gold or GDP, but by code, consensus, and compute power. And here’s what’s wild: for the first time, digital currency frameworks are being tied to climate performance.

Imagine this: a central bank digital currency (like the e-euro or DUSDR) loses value if national emissions targets are exceeded, and gains value if carbon drawdown thresholds are met.

It’s bold. It’s technocratic. And it might be the only way to force accountability into financial systems that have ignored planetary cost for decades.

But it also introduces new instability. What happens when a wildfire, a natural disaster no policy chose, pushes emissions past target and tanks your national currency? Are we creating a fairer market, or just a more volatile one?

Where Does This Leave Us?

As a young guy who loves spreadsheets, market cycles, and economic theory, I’m genuinely fascinated by what’s unfolding in 2025. We’ve spent decades saying the market is rational, self-correcting, and largely ungovernable. Now we’re watching the rise of tools that actually make it governable, but often by removing the human from the loop.

We’re replacing regulators with neural nets, central banks with logic chains, and policy with predictions. It’s efficient. It’s elegant. But it’s also deeply unsettling. Because economies aren’t just data. They’re people, cultures, fears, aspirations, and irrational choices. So the real question for the second half of 2025 isn’t how smart the system gets — it’s how human we allow it to remain.
